Summary of Meta’s Admission on Facebook Groups Suspensions

Overview

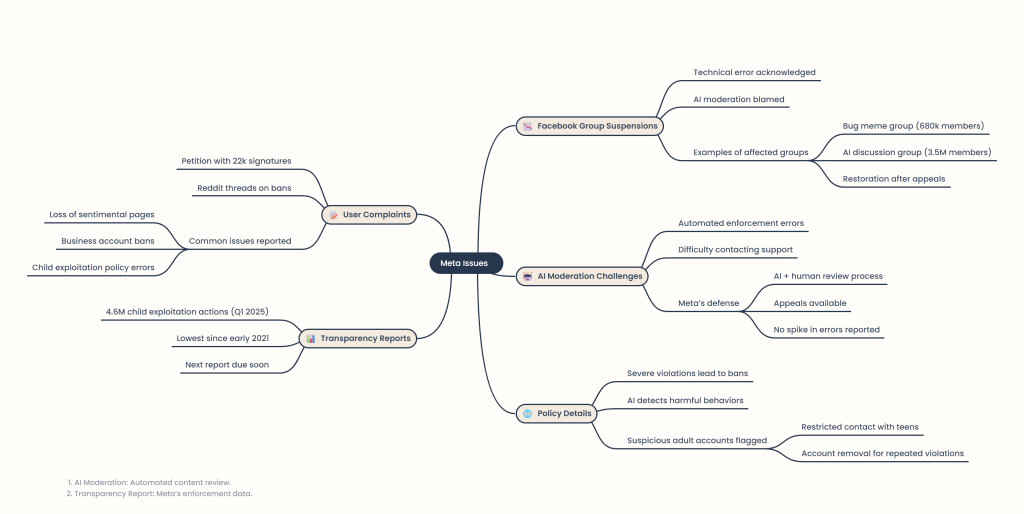

Meta has acknowledged a technical error that led to the wrongful suspension of Facebook Groups. However, the company denies that the issue reflects a broader problem across its platforms. Users reported receiving automated messages claiming their groups had violated Meta's policies, after which the groups were deleted.

Issues with Facebook Groups

Automated Suspensions: Group administrators reported receiving erroneous notices claiming their groups had violated community standards, which led to the groups being suspended.

Restoration of Groups: Some groups, including a meme-sharing group with over 680,000 members, were removed and have since been restored now that the error has been recognized.

User Experiences: The admin of an AI-focused group with 3.5 million members reported that both the group and his account were temporarily suspended, which Meta later admitted was a mistake.

User Reactions and Petitions

Growing Concerns: Thousands of users have voiced frustration over mass suspensions on Facebook and Instagram. A petition titled “Meta wrongfully disabling accounts with no human customer support” has gathered nearly 22,000 signatures.

Shared Experiences: Users have taken to Reddit to describe losing access to pages with sentimental value and to business accounts. Some reported being banned over serious allegations, such as child sexual exploitation, which they say were unfounded.

Meta’s Response

Denial of Widespread Issues: Meta stated that it has not observed a significant increase in erroneous account suspensions across its platforms.

Appeal Process: The company emphasizes that users can appeal suspensions if they believe a mistake has been made.

Use of AI in Moderation: Meta confirmed that AI plays a central role in its content review process, detecting and removing content that violates community standards before it is reported. Human reviewers are involved only in certain cases.

Transparency and Reporting

Community Standards Enforcement Report: Meta publishes a report detailing the actions taken against violations of its policies. The most recent report indicated a decrease in actions taken against child sexual exploitation content.

Enforcement Against Severe Violations: Meta’s policies impose stringent measures against severe violations, such as posting child sexual exploitation content, which can lead to immediate account removal.

Conclusion

While Meta acknowledges a specific technical error affecting Facebook Groups, it maintains that there is no widespread problem with its enforcement practices. The company continues to rely heavily on AI for content moderation, a reliance that has drawn criticism from users who say they were wrongly suspended.